How to turn ChatGPT into your best pipeline source

A playbook for making AI discovery the best new channel for high-intent leads

As much as we talk about AI in go-to-market, the reality is that 85% of enterprise sellers still manage their book in spreadsheets. Only 5% use CRM.

Deal data sits in Salesforce. Calls are in Gong. Content is scattered everywhere. It’s no wonder spreadsheets seem like the only unification layer.

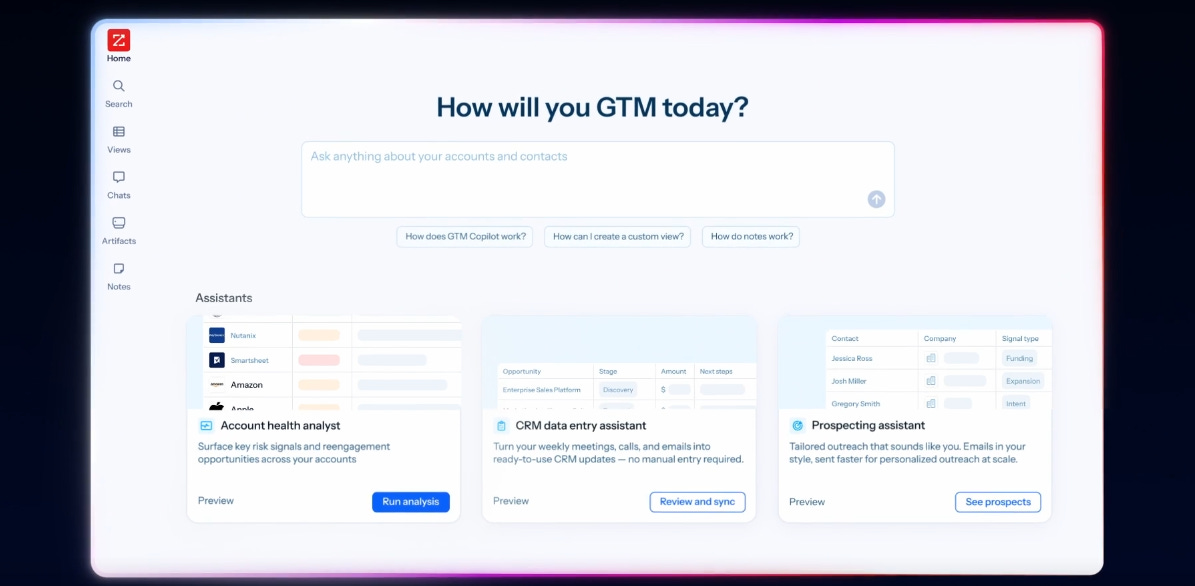

ZoomInfo built the new ZoomInfo Copilot Workspace to finally fix this. It’s one hub with buyer context, CRM data, and intelligence on 100M+ companies and 500M+ contacts. No more tab-hopping or spreadsheet mishaps.

Execution wins deals. Everything else is just preparation. Learn more here.

There’s one GTM channel that nearly everyone seems to be investing more in. It’s AI discovery, also known as answer engine optimization (AEO) or AI SEO.

I wrote a primer about the topic in July. The piece is already my most-read newsletter of the year. Since then, ChatGPT usage has reportedly reached 800 million weekly active users, a material increase from 500 million at the end of March.

Despite (or because of) the exploding interest, there’s a near-infinite amount of snake oil and self-interested advice. But there are very few tactical playbooks and case studies featuring real B2B companies seeing real results. I put out a call for examples and got connected to Docebo, a publicly traded learning management system (LMS).

AI discovery now accounts for 12.7% of Docebo’s high-intent leads, up 429% over the past year. Docebo has done this with just one team member, Valeriia Frolova, dedicated to both SEO and AEO efforts. I spent time with Valeriia and VP of revenue marketing Silvia Valencia to distill their exact learnings from the past year.

Here are four practical takeaways to improve your AI search visibility:

1. Measure your AI search performance through self-reported attribution, branded search traffic, and share-of-voice in AI queries.
2. Late-funnel SEO is the foundation for great answer engine optimization (AEO).
3. The dirty work of AEO has to get done: updating, formatting, and structuring content for LLMs.
4. You can see tangible AEO results with a modern tech stack.

1. Measure your AI search performance through self-reported attribution, branded search traffic, and share-of-voice in AI queries.

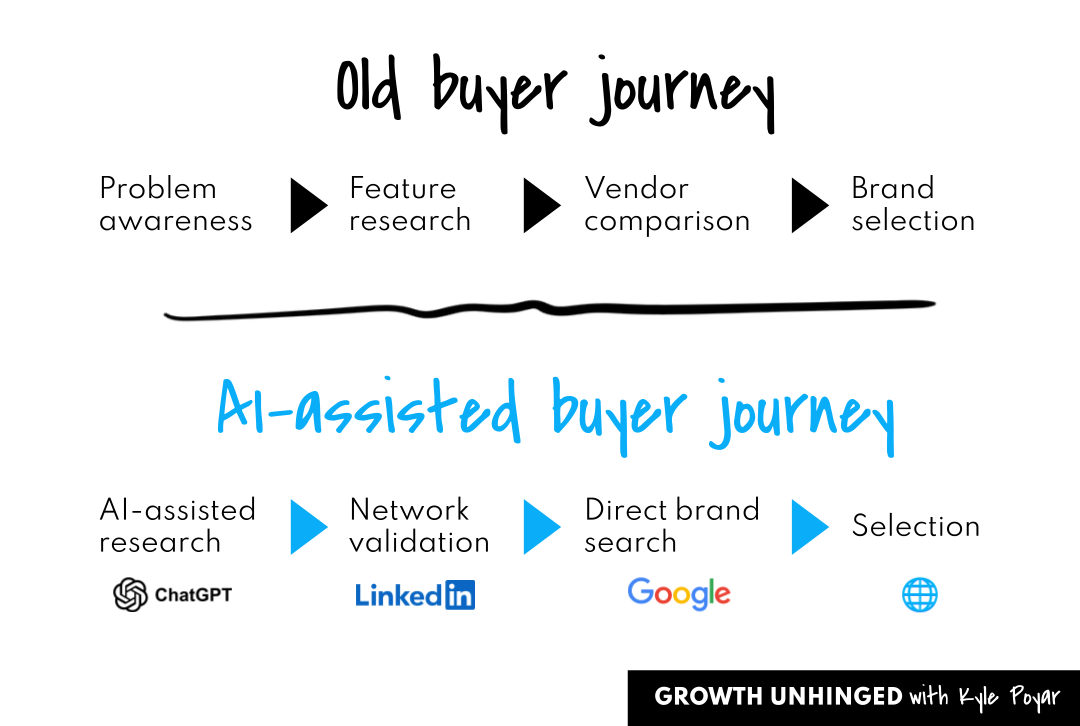

Brand search is becoming the only high-intent signal that matters, Silvia told me. Buyers aren’t necessarily searching for “learning management system” these days. They’re going to ChatGPT or Gemini for recommendations, validating options with their peers, and then searching brands directly.

“LLMs have made the dark funnel even darker,” Silvia said. “Prospects are building complete vendor shortlists without ever visiting a website. Search is becoming like every other channel — users consume content in-platform without clicking through. The same way LinkedIn posts drive pipeline without website visits.”

This shift means brand metrics become your growth metrics. The top two that Silvia and Valeriia obsess over: branded search volume and share-of-voice in LLM queries.

When the Docebo team examined their latest search traffic, they saw that only 15% of search clicks now come from non-branded topics. That’s down dramatically from last year, when non-branded topics made up about 35% of all search clicks. In other words, 85% of the people who visit Docebo’s website from Google already know about Docebo and are actively seeking it out.

This is driven by two factors: (a) an uptick in brand discovery from AI and (b) a drop in clicks because of things like AI overviews. In fact, Docebo sees a 26% reduction in clicks whenever an AI overview appears in a search result.
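If you want to track the same branded vs. non-branded split, here’s a minimal sketch. It assumes you’ve exported (query, clicks) pairs from your search analytics tool. The brand term list, function names, and sample numbers are all hypothetical, not Docebo’s data or tooling.

```python
from typing import Iterable

# Hypothetical brand terms; in practice you'd add common misspellings.
BRAND_TERMS = ("docebo",)


def is_branded(query: str, brand_terms: Iterable[str] = BRAND_TERMS) -> bool:
    """Return True if the search query mentions any brand term."""
    q = query.lower()
    return any(term in q for term in brand_terms)


def non_branded_click_share(rows: list[tuple[str, int]]) -> float:
    """rows are (query, clicks) pairs; returns non-branded clicks / total clicks."""
    total = sum(clicks for _, clicks in rows)
    if total == 0:
        return 0.0
    non_branded = sum(clicks for query, clicks in rows if not is_branded(query))
    return non_branded / total


# Made-up sample data for illustration only.
sample_rows = [
    ("docebo login", 500),
    ("docebo pricing", 350),
    ("enterprise lms for customer training", 100),
    ("lms with salesforce integration", 50),
]

share = non_branded_click_share(sample_rows)
print(f"Non-branded share of clicks: {share:.0%}")
```

Tracking this ratio quarter over quarter is what surfaces a shift like the 35% to 15% drop described above.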

Click-based attribution methods might lump all search traffic together, even if the search behavior isn’t really what influenced the buyer. Docebo tries to get to ground truth by asking customers directly with a mandatory “How did you hear about us?” field on their demo booking page. (As a side note, LLMs are great at sorting through and categorizing this free-text data.)

Their data now shows 12.7% of high-intent leads in Q3 2025 specifically mentioned AI tools as their discovery source. This is up 5x compared to Q3 2024 and 10x compared to Q1 2024.
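To make the self-reported attribution math concrete, here’s a rough sketch of bucketing “How did you hear about us?” responses and computing the AI-sourced share. One big caveat: this uses simple keyword matching as a stand-in for the LLM categorization mentioned above. The categories, keywords, and sample responses are my own assumptions, not Docebo’s setup.

```python
from collections import Counter

# Hypothetical categories and keywords. A real setup might ask an LLM to
# label each response instead of relying on keyword matching.
CATEGORY_KEYWORDS = {
    "ai_tool": ("chatgpt", "gemini", "perplexity", "claude", "copilot"),
    "search": ("google", "bing"),
    "referral": ("colleague", "friend", "peer", "recommend"),
}


def categorize(response: str) -> str:
    """Return the first category whose keywords appear in the response."""
    text = response.lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return category
    return "other"


def ai_share(responses: list[str]) -> float:
    """Fraction of responses that name an AI tool as the discovery source."""
    if not responses:
        return 0.0
    counts = Counter(categorize(r) for r in responses)
    return counts["ai_tool"] / len(responses)


# Made-up form responses for illustration only.
responses = [
    "Asked ChatGPT for LMS recommendations",
    "Google",
    "A colleague recommended you",
    "Saw you at a conference",
]

print(f"AI-sourced share of leads: {ai_share(responses):.1%}")
```

Keyword rules will miss misspellings and vague answers, which is exactly where an LLM pass over the free text earns its keep.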

This gives Docebo visibility into whether AEO is driving pipeline, but it doesn’t help the team prioritize where to invest within AEO. For that, they look at share-of-voice compared to the competition for the top AI search queries that Docebo cares about most. Docebo has the highest share-of-voice (25%) in their category; the next competitor stands at 18%. (They use an AI monitoring tool called Xfunnel.)
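For a feel of how a share-of-voice number can be computed, here’s a simple sketch. I’m assuming one common definition: each brand’s mentions divided by all tracked-brand mentions across a set of AI answers, counting a brand at most once per answer. Monitoring tools like Xfunnel may define it differently, and the competitor names and answers below are placeholders.

```python
from collections import Counter


def share_of_voice(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Count each brand at most once per answer, then divide by all brand mentions."""
    mentions: Counter[str] = Counter()
    for answer in answers:
        text = answer.lower()
        for brand in brands:
            if brand.lower() in text:
                mentions[brand] += 1
    total = sum(mentions.values())
    return {brand: (mentions[brand] / total if total else 0.0) for brand in brands}


# Made-up AI answers to tracked queries; VendorB and VendorC are placeholders.
answers = [
    "Top options include Docebo and VendorB.",
    "Consider Docebo for enterprise use cases.",
    "VendorB and VendorC are popular choices.",
    "Docebo, VendorC",
]
brands = ["Docebo", "VendorB", "VendorC"]

for brand, share in share_of_voice(answers, brands).items():
    print(f"{brand}: {share:.0%}")
```

Under this definition the shares sum to 100%, which makes it easy to compare against competitors query by query.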

2. Late-funnel SEO is the foundation for great answer engine optimization (AEO).

Many of the companies feeling the most SEO pain right now are those that attracted a lot of broad, top-of-funnel traffic. That’s traffic that is better served by an AI overview or a simple answer from ChatGPT.

Docebo wanted to rank for things that an AI overview couldn’t easily replace. In late 2023, the team targeted specific money keywords that signal an enterprise buyer in late-funnel consideration. Around 200 of these keywords matter most to Docebo. The topics are relevant across SEO, AI overviews, and LLMs, and they’re oriented around Docebo’s key industry verticals, solution offerings, and customer pain points.

It turns out that these money keywords aren’t easy to find. Search analytics platforms like Ahrefs often say there’s near-zero search volume. How to find your hidden money keywords:

Analyze discovery call transcripts. Upload all discovery call transcripts from the last quarter into ChatGPT (or your LLM of choice). Ask AI for the most common themes around what people were asking for and why they decided to evaluate a new vendor.

Cross-check with external search platforms. Docebo used Ahrefs to get external signals on what people were searching for. (While this missed some long-tail queries, it was still helpful for higher volume terms.)

Look at the existing content around these terms. From there, Docebo pulled up the search results to see whether competitors were already ranking for these terms and whether the top-ranking content was actually useful.

Look at what LLMs and AI overviews are saying about the topic. Again, the goal was seeing gaps where Docebo could move into pole position.

This is a thorough and time-consuming process, to be sure. But LLMs and third-party platforms help automate the work. And the upfront work means less wasted effort later on.
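Here’s one way the four steps above could feed a simple prioritization pass. To be clear, this is my own illustrative heuristic, not Docebo’s method: the `Candidate` fields, the `min_mentions` threshold, and the sample keywords are all hypothetical. The idea is to keep themes that come up often in discovery calls (even when tools report near-zero volume) and put content gaps first.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    keyword: str
    call_mentions: int      # times the theme came up in discovery calls
    reported_volume: int    # monthly volume reported by a search tool
    competitor_ranks: bool  # whether a competitor already ranks well


def prioritize(candidates: list[Candidate], min_mentions: int = 3) -> list[Candidate]:
    """Keep themes buyers actually raise, then sort gaps first, most-mentioned first."""
    shortlist = [c for c in candidates if c.call_mentions >= min_mentions]
    return sorted(shortlist, key=lambda c: (c.competitor_ranks, -c.call_mentions))


# Made-up candidates for illustration only.
candidates = [
    Candidate("lms for customer training", 12, 0, False),
    Candidate("lms salesforce integration", 7, 10, True),
    Candidate("what is an lms", 1, 5000, True),
    Candidate("extended enterprise lms", 9, 20, False),
]

for c in prioritize(candidates):
    print(c.keyword, c.call_mentions, c.reported_volume)
```

Notice that reported volume is carried along for reference but deliberately left out of the ranking, since the whole point is that tools undercount these terms.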

3. The dirty work of AEO has to get done: updating, formatting, and structuring content for LLMs.

It’s easy (and fun) to talk about the strategy of showing up in AI search. But let’s be clear: the dirty work of AEO has to get done if you want to see results.

Valeriia shared an example of a piece optimized for AI discovery and exactly what she did to make it stand out. (Here’s the link if you want to see it for yourself.)

Subscribe to Kyle Poyar’s Growth Unhinged to keep reading this post and get 7 days of free access to the full post archives.