👋 Hi, it’s Kyle. Welcome to another free edition of Growth Unhinged, my newsletter that explores the unexpected behind the fastest-growing startups.

I’ve long looked up to Miro — the visual collaboration company valued at $17.5B — as one of the absolute best PLG businesses. Their user onboarding is particularly 🔥 and is emulated by companies large and small.

But I never knew the story behind how and why Miro’s user onboarding has changed. Kate Syuma, Head of Growth Design, joins the newsletter and reflects on their evolution from 2017 (50 people) to present day (1,800 people).

🎁 Want to go deeper into user-centric PLG with Kate? Join her new interactive course on Maven and get $100 off with code KYLExMAVEN. (Explore the full Growth Unhinged x Maven collection here.)

I joined Miro in 2017 and became the first Product Designer for the Growth team. Over six-plus years, we experienced exponential growth:

The company grew from 50 to nearly 1,800 people

The user base scaled from 1 million to more than 50 million users

My team scaled from 1 to 11 Product and Content designers

As Head of Growth Design, I decided to look back at how user onboarding evolved at Miro over time and share our approaches and key learnings.

In this post, I’ll share the evolution of Miro’s approach to user onboarding and activation across three phases: Startup, Hyper-growth, and the current Growth at scale phase. It won’t be limited to the success stories we’re used to hearing; I intentionally want to share the failures and lessons that eventually helped us build a mature, iterative culture.

I interviewed a PM, Data Analyst, and Product Designer who worked on user onboarding and activation over time. Based on those learnings, I will share how we approached experimentation, resolved challenges, and changed design patterns and activation metrics during pandemic-era hyper-growth. Then, I’ll reflect on where we are today and what’s next.

I hope these insights will help product teams of different sizes and scales take a fresh look at their user onboarding and activation to unlock new perspectives to grow faster.

Phase 1: Startup [50 people, 1 million users]

When I joined Miro (formerly RealtimeBoard) in 2017, we started working on user onboarding right away. Onboarding is the foundation for success further down the funnel (retention, monetization), and at the time our biggest drop-off was in week 1 (W1) retention. We were iterating on the sign-up journey and the user’s first experience with Miro, following a direction inspired by common best practices:

Goal-oriented

Segmented by role

Guided, step-by-step experience

As we were rebranding in parallel, we decided to update our sign-up journey and make it more interactive, visual, and value-focused. As a designer, I was very satisfied with the outcome, and even more pleased with the qualitative user feedback.

We got the results. They were negative.

Fewer users sent email invites from the sign-up flow in the new version.

Fewer users completed the role selection step in the new flow.

Fewer users started their work from a template in the new flow.

We quickly learned that the interactive embellishments in the flow distracted users from completing the main tasks. It shook me, as a designer, out of my traditional UI/UX-oriented perspective. We needed to iterate, but in hyper-growth, bigger priorities shifted our team’s focus.

Looking back, “smart iteration” on that experience could have helped us find the right trade-off without sacrificing the visual elements that were created to show the product’s value. I asked a former colleague, then a PM on the Growth team, to reflect on it with me, and she shared some valuable advice for early-stage businesses focused on onboarding:

“I’d highlight two main learnings as a Product Manager. First: every time you run an experiment, you need to run user interviews afterward, because data tells you what is happening, but only users can tell you WHY it is happening. Second: you cannot succeed on the first try. It’s important to learn from users why something doesn’t work and run one, two, or even three iterations, so you get the most out of your hypothesis. Launch, learn from users, iterate: that matters a lot for achieving business impact.”

Later, I’ll show how we applied these learnings at Miro and started iterating smart on the onboarding flow. But first, here’s what we learned from big investments during a difficult and intense period of hyper-growth.

Phase 2: Covid Hyper-Growth [150 → 1000 people, 3 → 10 million users]

In 2019, we launched our freemium business model and the Miro rebrand. Shortly afterward, in early 2020, another big external factor hit the world and the company: the pandemic. In six months, the company grew from 150 to 1,000 people, and our user base scaled from three to 10 million users. These were less tech-savvy customers who needed Miro to keep doing their work efficiently and to stay connected with their colleagues. We learned that this new audience needed to get up to speed quickly, while both the onboarding and the tool itself were complex. We needed to simplify the first user experience.

With that refreshed activation perspective in mind, we started tackling user onboarding problems to find impactful solutions. From a Product and Design perspective, we began thinking about how to innovate on the onboarding experience while accounting for the refreshed activation definition. It was a good moment to run data research and a regression analysis to synthesize our existing knowledge about activation and define the Setup, Aha, and Habit moments. (Learn more about Miro activation in the bonus section at the end.)

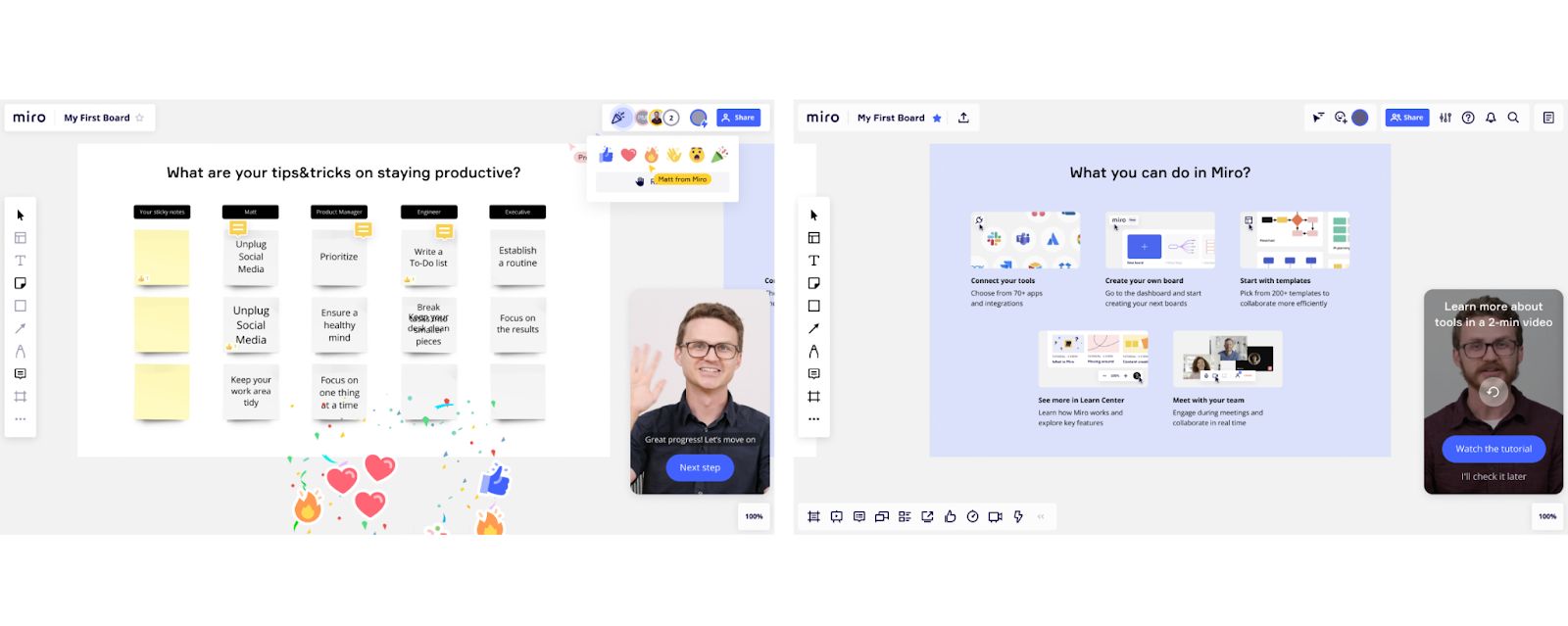

We always try to run qualitative user research in parallel with data analysis, and the user interviews gave us additional insight: people didn’t know what they or their teams could do in Miro. We were positioning Miro as a “team-centric” product, which meant collaboration was the core value we needed to deliver. This was the main user problem for a new onboarding experience to solve, and the team came up with a genuinely innovative solution: a so-called “robo-collaboration” experience that guided users through their first session in a human way. The team, leadership, and users loved it. (Fun fact: we first user-tested this flow with AI-generated videos made in Synthesia two years ago, before AI became the new norm!)

It turned out to be a big bet for the team, with more than a quarter of investment in the research, design, and validation phase. As you can see from the images above, our Miro Academy manager Matt grew a beard over the course of recording the human video walkthrough, which was produced in parallel with the simulated collaborative session.

Once the team had buy-in from leadership and positive reactions from several rounds of user validation, we started building. The team scoped it into an MVP and launched a test in 1.5 months. The results were mixed:

A quarter of users started the tutorial. The number wasn’t higher for several reasons: users didn’t have time, had a specific task to do, already knew how to use Miro, couldn’t watch with audio, or found the information irrelevant.

We saw a meaningful uplift in users who created content in their first session, because the tutorial prompted users to take easy actions.

Still, no improvement on the aha moment.

The team realized that we needed to double down on personalization and localization. The second iteration showed traction:

Twice as many users started the tutorial compared to MVP1.

However, we saw a big drop-off at the second tutorial step.

The team did a great job of moving the needle on users who create content, but it didn’t lead to more collaborative sessions. We needed to zoom out and find the root cause, so we stopped iterating on this investment and started brainstorming other hypotheses. In the next section, I’ll reveal how the team moved the needle on the aha moment through smart iterations.

Phase 3: Growth at scale and the power of smart iterations [~1,800 people, ~50 million users]

As the product grew, it became more and more complex to iterate on its different parts. The team had to become more mature and thoughtful about experimentation and master the art of connecting the dots between qualitative and quantitative learnings.

To uncover deeper insights, our UXR team ran diary studies to explore the behavior of prospective users as they trialed Miro for 28 days. We wanted to uncover the main blockers causing early churn and preventing activation.

The team analyzed anonymized user behaviors around initial use and early collaboration patterns to help drive deeper understanding of what to emphasize during the first experience.

That established the foundation from which we started exploring hypotheses for new activation experiments and looked deeper into the experiences of two main segments — Creators and Joiners.

The team started focusing on the experience for Joiners as one of the audiences to double down on. Our hypothesis was: if we break the ice for new board joiners by nudging them to perform an easy, simple, and delightful collaborative action that removes their fear of engaging with a new tool, more of them will reach the aha moment.

Next, we needed to define that easy action that could drive delight and stickiness to the product. It wasn’t that difficult to uncover that Miro reactions provide this simple and delightful effect.

Based on that insight, the team created a solution in which new joiners were prompted to “Say Hi” on a board using a reaction. Another collaborator would receive a notification about the reaction, encouraging them to start collaborating on the board.

However, the first iteration didn’t produce a statistically significant (stat-sig) uplift in the aha moment. The team had to dig deeper into what didn’t work and how to iterate further.

To do so, the team ran an in-depth post-analysis study that helped them uncover six theories and prioritize the main improvements for the next iteration, instead of doing a bigger pivot. Here are the main smart iterations the team uncovered:

Improve discoverability: increase tip visibility by making the tip background dark.

Decrease cognitive load: let users trigger reactions directly from the tip button, and show a new tip indicating where they can find more reactions later.

After applying the two prioritized theories, the team iterated on that experience and added several improvements — making the tip more noticeable, simplifying reactions, and adding a reminder of where to find more reactions.

Even without investing in post-validation user research, these small changes achieved an uplift in the aha moment that matched the team’s prediction.
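The post doesn’t say which statistical test Miro used to judge the aha-moment results. As a generic illustration of what “stat-sig” means for a rate such as “share of joiners who reach the aha moment,” here is a minimal two-proportion z-test sketch; the function name and all counts are hypothetical, and real experimentation platforms often add corrections (sequential testing, multiple comparisons) that this sketch omits.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: rate_a == rate_b, using the pooled rate.

    Assumes both arms are non-empty and the pooled rate is strictly between 0 and 1.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)), which equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: users reaching the aha moment / users in each arm.
z, p = two_proportion_z_test(conv_a=1000, n_a=10_000, conv_b=1030, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")  # p is far above 0.05: not stat-sig
```

A 0.3-point lift on a 10% base rate is not distinguishable from noise at this sample size, which is one reason a promising first iteration can come back “flat” and still be worth iterating on.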

Key tips on how to “iterate smart”

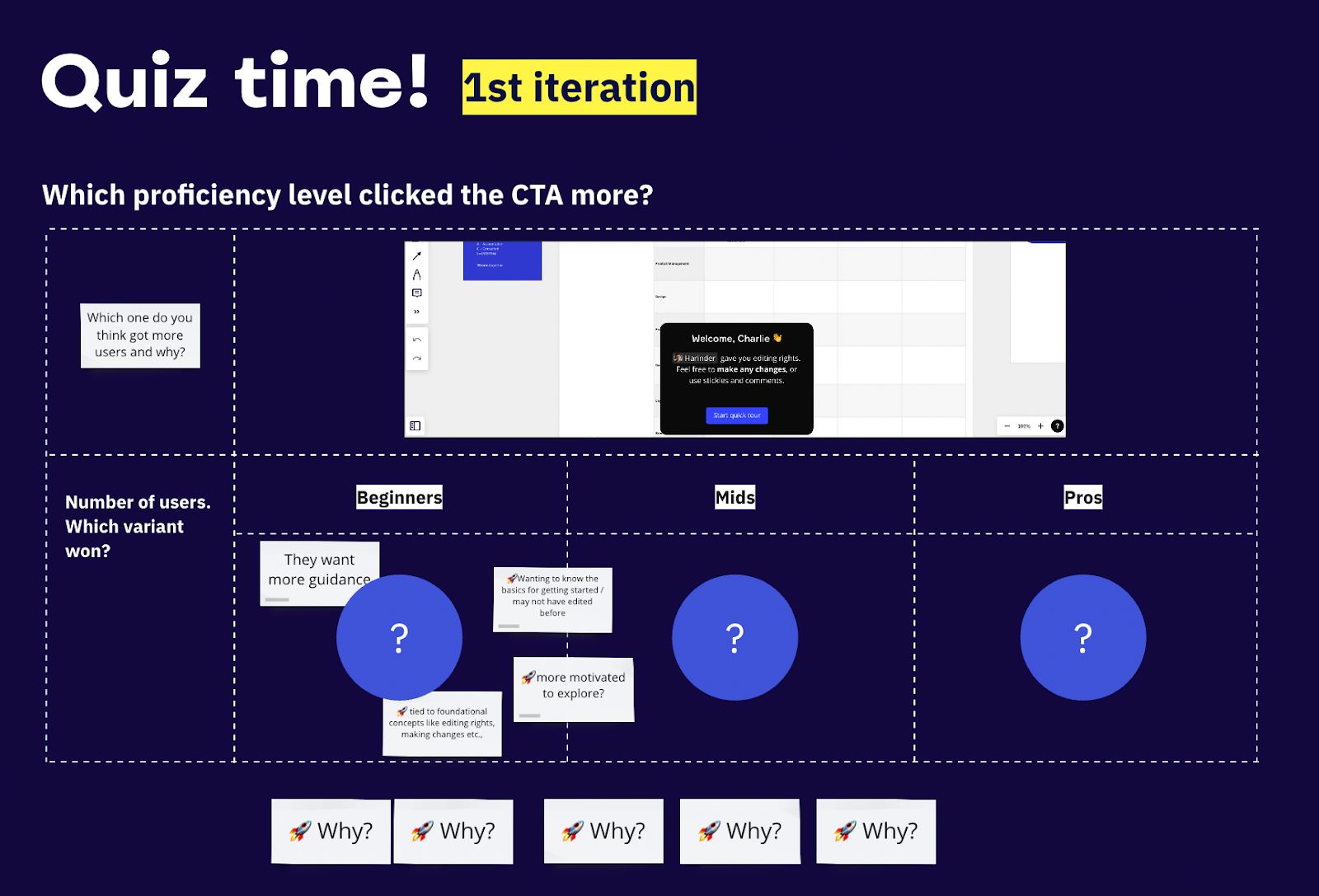

Having spent over six years on Growth teams at Miro, I think the most important and lasting lesson I’ve learned is that we need to keep learning and iterate smart. On our Growth Design team, we reflect on each experiment and unpack the WHY behind the result. The “quiz workshop” format helps us uncover further iterations collaboratively: we look at an experiment, try to guess which variation won and the WHY behind it, and use that discussion to co-create future solutions quickly.

Here are five key tips to help your team iterate on your product onboarding flow with intention:

Run regular usability tests of your onboarding experience with users and non-users (2-3 per week) to build your product sense muscle.

Try to validate your big investments with smaller first iterations by decomposing them.

Once you get the first results, dive into the behavioral data post-analysis to map the theories, evidence, and solution ideas for iterations.

Reflect on your first iteration — try the “quiz workshop” format for your retrospective.

Always run a second iteration.

I hope this case study helps you improve your own user onboarding. Don’t give up after the first iteration, always ask why, and keep listening to your users.

Bonus: How Miro approached the activation metric

In order to build a well-performing onboarding experience, you need to measure each experiment properly. As Miro evolved, we formulated a solid definition of the activation metrics to reflect the longer-term inflection in retention. To build that, we ran a regression analysis along with qualitative and quantitative studies.

What is the goal of the activation metric at Miro?

Activation metrics should measure the early stages a user needs to go through to successfully achieve a desired outcome. They describe the path that links newly acquired users to sustained future usage and, therefore, measure how much friction a user experiences early on. For Miro, this means we expect users to leave the activation phase with a good grasp of our product’s core value proposition, and that this phase sets them up for long-term usage.

How did you determine the activation metric at Miro?

To make things easier and more manageable, we split the activation phase into three stages: setup, aha, and habit. We only consider a user fully activated once they have passed through all three.

The setup moment is the first time a user comes into contact with the product.

The aha moment is when the user experiences our core value proposition for the first time; it should capture the “click” in a user’s mind when they think, “Cool, I understand why this is useful.”

And finally, we consider someone fully activated when they do this core action repeatedly, within a given time period. It’s the habit moment.
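To make the three-stage structure concrete, here is a minimal sketch that assigns a user the furthest stage they reached. Everything specific in it is hypothetical: the event names `board_opened` and `collaborative_session`, the 3-day threshold, and the 28-day window are illustrative assumptions, not Miro’s actual definitions, which the post does not disclose.

```python
from dataclasses import dataclass
from datetime import date

# HYPOTHETICAL definitions for illustration only; the post does not disclose
# which events or thresholds Miro uses for each stage.
SETUP_EVENT = "board_opened"           # hypothetical setup action
CORE_EVENT = "collaborative_session"   # hypothetical core (aha) action
HABIT_MIN_DAYS = 3                     # hypothetical: core action on >= 3 distinct days
HABIT_WINDOW_DAYS = 28                 # hypothetical window after sign-up


@dataclass
class Event:
    name: str
    day: date


def activation_stage(signup: date, events: list[Event]) -> str:
    """Return the furthest stage reached: 'none', 'setup', 'aha', or 'habit'.

    'habit' means the user passed all three stages, i.e. is fully activated.
    """
    names = {e.name for e in events}
    if SETUP_EVENT not in names:
        return "none"
    if CORE_EVENT not in names:
        return "setup"
    # Habit: the core action repeated on several distinct days within the window.
    core_days = {
        e.day
        for e in events
        if e.name == CORE_EVENT and 0 <= (e.day - signup).days < HABIT_WINDOW_DAYS
    }
    return "habit" if len(core_days) >= HABIT_MIN_DAYS else "aha"


signup = date(2024, 1, 1)
events = [
    Event("board_opened", date(2024, 1, 1)),
    Event("collaborative_session", date(2024, 1, 2)),
    Event("collaborative_session", date(2024, 1, 9)),
    Event("collaborative_session", date(2024, 1, 20)),
]
print(activation_stage(signup, events))  # habit
```

Reporting the furthest stage per user, rather than a single activated/not-activated flag, shows where in the funnel new users stall.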

At Miro, we uncovered those moments by using a two-way approach: quantitative and qualitative.

On the quantitative side, we looked at users who retained well over a long period of time; they were our target. We looked for anonymized patterns in their usage and found that they took significantly different actions than users with low retention. On the qualitative side, we ran several user interviews to understand, from users’ own perspective, what friction they encountered while using Miro for the first time, and used those insights to guide and refine our analytical methodology. After all, we always need user-centric metrics that cover true pain points.
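A simple first pass at “what do retained users do differently?” is to compare long-term retention between users who did a candidate early action and users who didn’t. The sketch below does only that; it is not Miro’s regression analysis, and the dictionary keys and action names (`invite_sent`, `board_created`) are hypothetical.

```python
import math


def rate(flags: list[bool]) -> float:
    """Share of True values, or NaN for an empty group."""
    return sum(flags) / len(flags) if flags else math.nan


def retention_by_action(users: list[dict], candidates: list[str]) -> dict[str, tuple[float, float]]:
    """For each candidate early action, return (retention if done, retention if not done).

    Each user is a dict with HYPOTHETICAL keys:
      'early_actions': set of action names taken in the first week
      'retained': whether the user was still active at a long-term checkpoint
    """
    results = {}
    for action in candidates:
        did = [u["retained"] for u in users if action in u["early_actions"]]
        did_not = [u["retained"] for u in users if action not in u["early_actions"]]
        results[action] = (rate(did), rate(did_not))
    return results


# Toy data with hypothetical action names.
users = [
    {"early_actions": {"invite_sent", "board_created"}, "retained": True},
    {"early_actions": {"board_created"}, "retained": False},
    {"early_actions": {"invite_sent"}, "retained": True},
    {"early_actions": set(), "retained": False},
]
for action, (with_it, without_it) in retention_by_action(users, ["invite_sent", "board_created"]).items():
    print(f"{action}: {with_it:.0%} retained vs {without_it:.0%}")
```

A large gap only flags a candidate worth investigating: it shows correlation, not causation. A regression, as described in the post, lets you estimate each action’s association with retention while controlling for the others, and interviews help confirm the action actually reflects experienced value.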

Lastly, once we had defined the set of activation metrics, we back-tested it against different user groups and cohorts to check whether it reflected what we expected to observe. We also checked the metric’s coverage of our targeted users and benchmarked its values against industry standards.

For example, we had several candidate habit metrics that didn’t correlate well with our target audience or were too complicated to explain and measure. Simplicity is also key here, since ideally this set of metrics is well understood across the entire company. What’s interesting is how this metric surfaced the issues and friction in our onboarding process and, paired with good experimentation techniques, helped steer decisions on this front.
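One way to read “checking the coverage of this metric against our set of targeted users” is as a comparison between the users a metric flags as activated and a target group such as long-term retainers, repeated per cohort for the back-test. The sketch below assumes that interpretation; the `precision` figure is an extra check added for illustration, and the cohort labels and user IDs are hypothetical.

```python
def coverage_and_precision(activated: set[str], target: set[str]) -> tuple[float, float]:
    """Compare users flagged as activated against a target group (e.g. long-term retainers).

    coverage:  share of target users that the metric flags as activated
    precision: share of flagged users who actually belong to the target group
    """
    hits = len(activated & target)
    coverage = hits / len(target) if target else 0.0
    precision = hits / len(activated) if activated else 0.0
    return coverage, precision


# Back-test per cohort; cohort labels and user IDs are hypothetical.
cohorts = {
    "2023-01": ({"u1", "u2", "u3", "u4"}, {"u1", "u2", "u5"}),
    "2023-02": ({"u6", "u7"}, {"u6", "u7", "u8", "u9"}),
}
for cohort, (activated, target) in cohorts.items():
    cov, prec = coverage_and_precision(activated, target)
    print(f"{cohort}: coverage {cov:.0%}, precision {prec:.0%}")
```

A candidate metric whose coverage or precision swings widely between cohorts is a warning sign, in the same spirit as the habit candidates that didn’t correlate well with the target audience.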

Looking back, what advice would you give to teams who are trying to define or rethink their Activation metric?

It’s very important to have a few things clearly mapped and defined before jumping on activation definitions:

What is the core value that users need to experience?

What is your desired outcome? It could be retention, high frequency, or monetization. It’s important to define this because your selected audience will be derived from this outcome.

What friction and successes do users experience, in their own perception? Sometimes your understanding differs from the users’, and if this bias isn’t double-checked, it will be carried into the metric definitions.

Lastly, don’t get too attached to activation frameworks. There are several “how-tos” that can guide and serve as inspiration, but there’s no universal recipe for success that is applicable to everyone. Trying to replicate and apply methodologies exactly as they’re taught will only delay the team. Find what works for you and stick to it :)