👋 Hi, it’s Kyle Poyar and welcome to Growth Unhinged, my weekly newsletter exploring the hidden playbooks behind the fastest-growing startups.

“Hey, can we test this?” If those five words sent a shiver down your spine, today’s guest post might be for you. The tech industry has a borderline obsession with testing. In my experience, the vast majority of this testing is garbage-in, garbage-out — leaving us with few new insights and a lot of wasted effort.

My friend, and past contributor, Casey Hill recently joined DoWhatWorks as CMO. DoWhatWorks removes the guesswork from website optimization by analyzing growth experiments across 2,000+ companies (they’re tracking 25,000 tests and counting). Casey chimes in this week to share exactly what he’s learned from all that data.

I’ve worked in software for the past 15 years, at TechValidate (acquired by SurveyMonkey), ActiveCampaign, and now as CMO of DoWhatWorks. While each experience was different, there was one constant: A/B testing.

At ActiveCampaign, we tested everything from email subject lines to website copy to onboarding product flows (and I wrote about some of what we learned). Now at DoWhatWorks, I have the good fortune of sitting on a dataset of more than 25,000 A/B tests run by the most beloved brands out there. We track everything from the company running each test to page type, sector, elements tested, and recency.

The more time I spend around testing, the more I’m convinced that A/B testing is broken. First off, only 10% of A/B tests tied to revenue beat their controls. That means we’re not only spending untold time, energy, and money on tests that don’t work; we’re also losing millions of dollars by putting these tests in front of our audience, only to see conversion suffer.
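What counts as “beating the control” depends on each team’s decision rule, and the post doesn’t say which rule sits behind the 10% figure. One common choice is a two-proportion z-test on conversion rates. The sketch below assumes a one-sided test at a 5% significance level; the function names and the visitor counts are hypothetical, chosen only to illustrate the idea.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare variant B against control A on conversion rate.

    Returns (z, p_value), where p_value is one-sided: the probability of a
    difference at least this large in B's favor if A and B truly convert equally.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis that both versions convert the same
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero (all or no visitors converted)")
    z = (p_b - p_a) / se
    # Upper-tail probability of a standard normal, P(Z >= z)
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


def beats_control(conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = 0.05) -> bool:
    """Hypothetical decision rule: B 'wins' if its one-sided p-value is below alpha."""
    _, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return p_value < alpha


# Hypothetical counts: control converts 500 of 10,000 visitors, variant converts 600 of 10,000
z, p = two_proportion_z_test(500, 10_000, 600, 10_000)
print(f"z = {z:.2f}, one-sided p = {p:.4f}")
print("variant beats control:", beats_control(500, 10_000, 600, 10_000))
```

With these made-up counts, a 5.0% control against a 6.0% variant gives z ≈ 3.10, so the variant wins under this rule; a variant converting 510 of 10,000 (5.1%) gives z ≈ 0.32 and does not. A variant that merely looks a little better is not the same as one that beats its control.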

The problem is actually much more insidious, though. Brands are copying the losing versions of their competitors’ sites, so the same tests keep failing again and again. Teams are blindly copying ‘best practices’ that turn out to be what’s most popular, not necessarily what’s most effective. And when brands do finally find a winner, they aren’t implementing it.

Let’s unpack why our A/B testing system is broken and, more importantly, what to do about it.

Fail #1: Teams are copying what’s common versus what’s winning

There is a herd mentality when it comes to certain aspects of web design. But oftentimes, when tested head to head, these ‘best practices’ lose.

For years, the ‘best practice’ in B2B SaaS was a single CTA in the hero. But increasingly, when top brands test one vs. two CTAs head to head, the multiple-CTA versions are winning. I’ve seen this with Slack, Shopify, Intercom, Loom and many others.

In my experience, the best path for a brand likely depends on (a) how homogeneous your traffic is and (b) whether your product is meant for self-service, enterprise, or both. The average B2B SaaS business has a diverse traffic base and is trying to appeal to both self-service and enterprise segments.

Another legacy best practice: simple backgrounds convert better on checkout or registration pages. In B2B SaaS, we find the opposite is often true. Below is a split test from Canva, where the illustrated background was the clear winner.

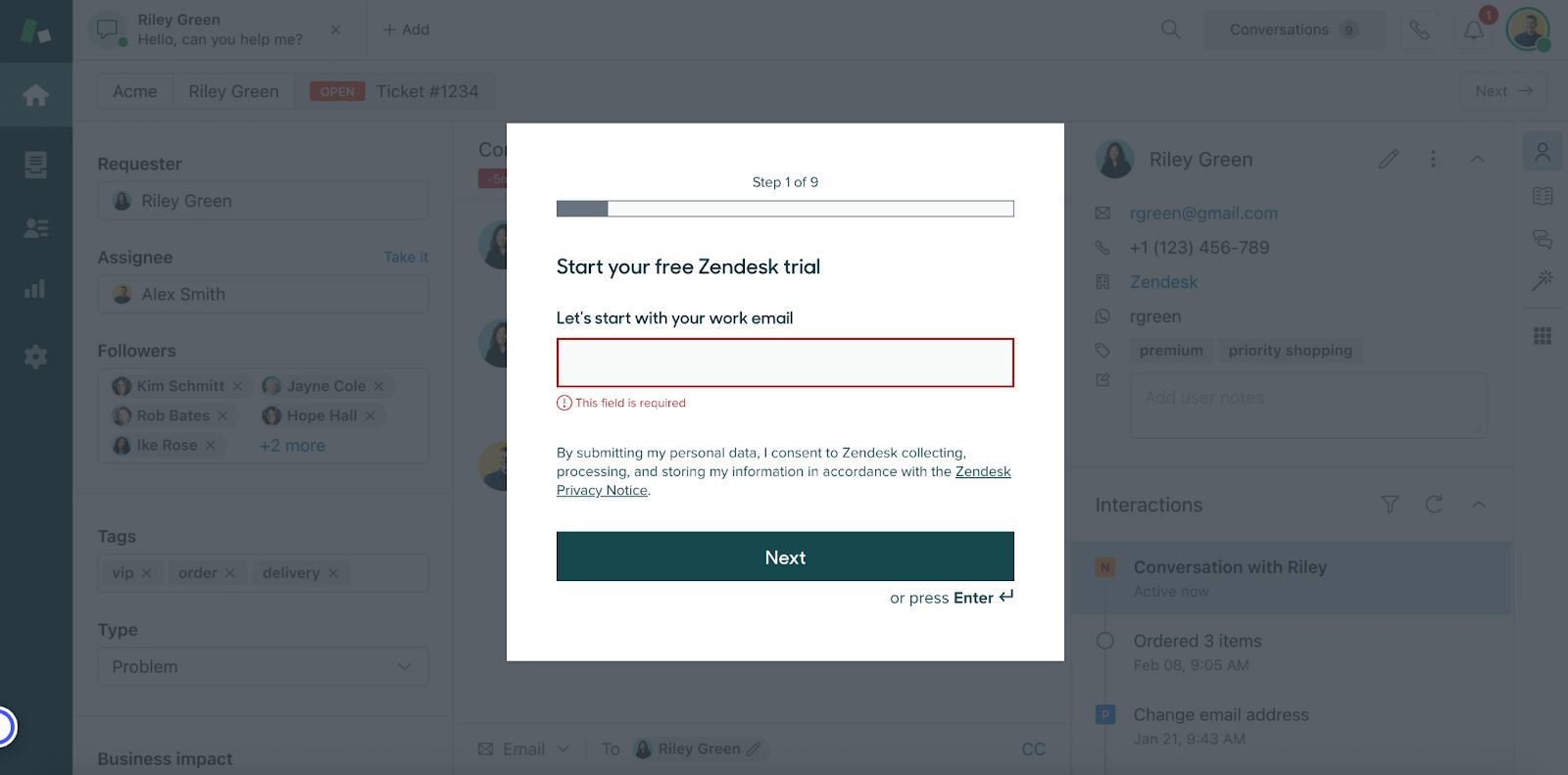

I’ve been seeing plain backgrounds lose out for dozens of other SaaS products, from Zendesk to Gorgias.

An emerging SaaS strategy is to use a semi-opaque background showing the product. The author of another fantastic newsletter did a detailed analysis of this very approach and found huge lifts for the semi-opaque background variant: a 25% conversion lift for MineOS and a 94% lift for MyCase.
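Lift figures like these are easy to misread. Assuming they are relative lifts (the usual convention, though the analysis cited here isn’t quoted on that point), a 25% lift means the variant converts at 1.25 times the control’s rate, which can be a small absolute change on a low baseline. The sketch below makes that distinction concrete; the baseline rates are hypothetical, since the underlying MineOS and MyCase conversion rates aren’t reported here.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative lift of a variant over its control (0.25 means +25%)."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


def percentage_point_change(control_rate: float, variant_rate: float) -> float:
    """Absolute change in conversion rate, in percentage points."""
    return (variant_rate - control_rate) * 100


# Hypothetical baseline: a 4.0% control vs. a 5.0% variant is a 25% relative lift,
# but only a 1 percentage-point absolute gain.
lift = relative_lift(0.04, 0.05)
points = percentage_point_change(0.04, 0.05)
print(f"relative lift: {lift:.0%}, absolute change: {points:.1f} pp")
```

Reporting both numbers side by side helps when comparing results across brands whose baseline conversion rates differ widely.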

Fail #2: Teams are leveraging outdated experience
