👋 Hi, it’s Kyle Poyar and welcome to Growth Unhinged, my weekly newsletter exploring the hidden playbooks behind the fastest-growing startups.

AI fluency is the skill of 2025. It’s becoming a part of performance reviews. It’s being evaluated in the hiring process. Meta is even letting candidates use AI during coding tests.

But being AI fluent (should we pronounce it like affluent?) is highly context-dependent. While an engineer might need to be familiar with AI coding assistants (and their limitations), a recruiter might need to find ways to accelerate resume screens (while minimizing bias), and a support team member might need to use AI to audit support docs for agents and LLMs.

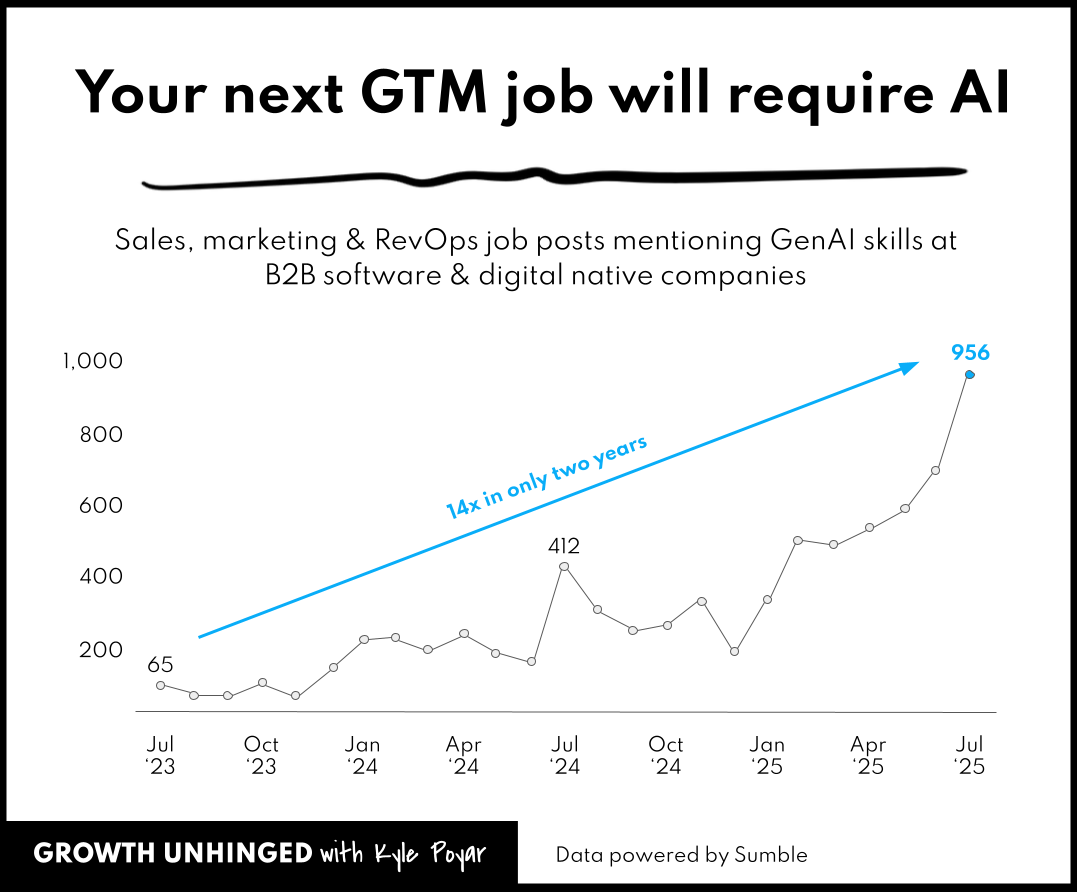

Looking at tech GTM roles, the number of job posts requiring AI skills has exploded in recent months. It increased from a mere 65 in July 2023 to nearly 1,000 in July 2025, roughly a 15-fold jump in two years. The data comes from Sumble, where you can explore the aggregated job posts for yourself.

I was shocked at how varied the specific job titles and requisite AI skills actually were. These jobs do include next-gen roles like growth/GTM engineers, but also social media producers, paid search specialists, BDRs, marketing analysts, content marketing managers, product marketers and CMOs. And the AI skills ranged from beginner (ex: “working proficiency of AI tools like ChatGPT”) to highly sophisticated (ex: “skills in using AI copilots and agents to personalize messaging, automate outreach, and surface insights from CRM data”).

Clearly, AI skills are in demand. But how do you figure out who has AI skills? And, if you’re thinking about changing jobs, how can you prepare yourself to demonstrate AI skills in an interview?

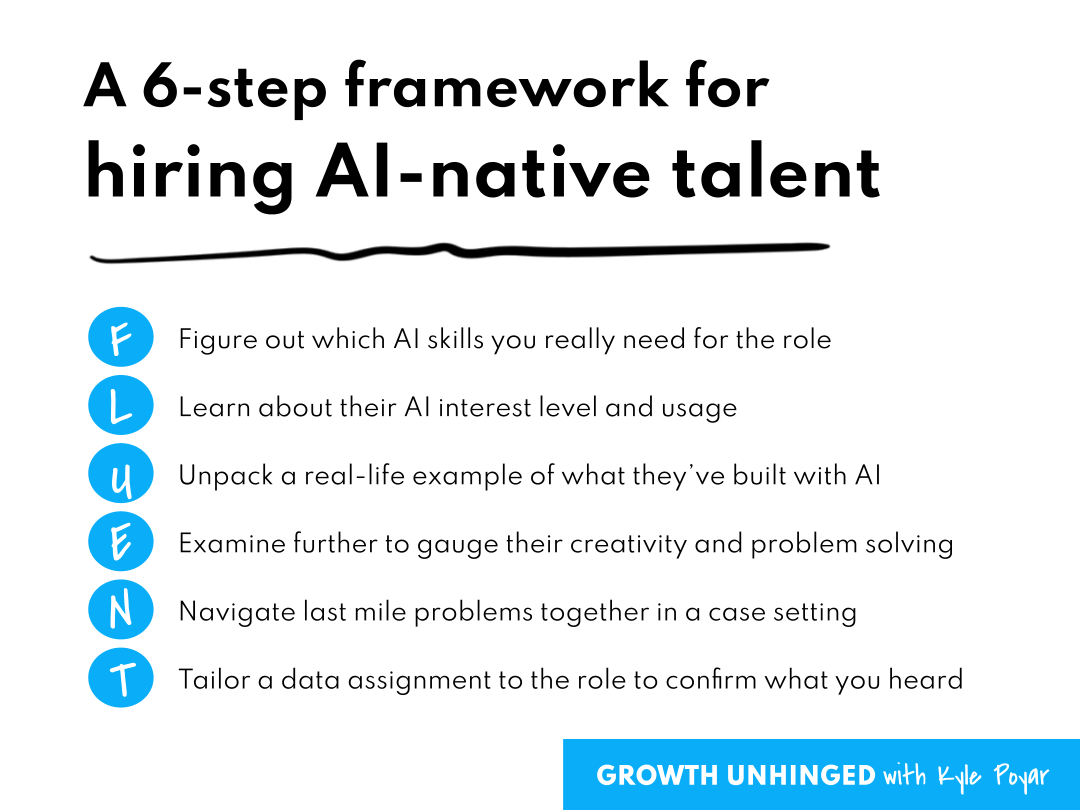

To help, I’ve put together the six-step FLUENT framework for hiring AI-native talent. This comes from polling readers (thank you!) along with some of my favorite GTM leaders who’ve been actively hiring AI-native folks at places like Clay, ClickUp, Wiz, Vercel and Zapier.

Step 1: Figure out which AI skills you really need for the role

Some jobs require systems-level thinkers who can build complex, multi-step workflows with AI agents and first-party data. Most don’t.

Your first step is to figure out which AI skills you really need for the specific job and which are simply nice-to-have.

Zapier has a helpful framework for how they think about evaluating AI skills across roles, including specific examples of what separates a capable hire from a transformative one. I’ve adapted their framework slightly (below) for GTM roles.

Unacceptable: Resistant to AI tools and skeptical of their value.

These are candidates who make little or no use of AI tools beyond ChatGPT. When pressed, they default to older playbooks or manual ways of doing things.

Capable: Using the most popular tools, with likely under three months of hands-on experience.

These folks use AI on perhaps a weekly basis for work related to their role. They’re curious about AI tools and eager to experiment with advanced functions when given the opportunity or training. They understand some of the limits of AI and have started to think through how to adapt to those (ex: human-in-the-loop review process).

Adoptive: Embedding AI in personal workflows, tuning prompts, chaining models, and automating tasks to boost efficiency.

Their AI use should begin to have a meaningful productivity impact for their specific role. They’ve brought AI into at least one complex, multi-step workflow like an AI agent or a custom GPT. And they can readily identify processes or even roles that could be fully automated with AI.

Transformative: Using AI not just as a tool, but to rethink strategy and deliver user-facing value that wasn’t possible a couple of years ago.

Their AI use should have a meaningful business impact across their team. At this level, folks are using AI to build teammates and run multiple workflows in parallel. They have demonstrated an ability to solve the complex, last-mile problems of getting AI to work for important processes. And they champion AI adoption beyond their specific role, bringing it to other teams or cross-functional workflows.

While in all cases you want to avoid anyone who’s unacceptable, for junior or entry-level roles you can be OK with someone who’s capable and eager to experiment. For more experienced ICs or Ops-focused roles, you’ll likely want to raise the bar to either adoptive or transformative depending on the context.

Step 2: Learn about their AI interest level and usage

“People think you need a big grand vision for how to adopt AI. The reality is that you want as many people playing with this on a personal productivity level as possible,” Phil Lakin told me.

Phil leads enterprise innovation at Zapier, where he helps enterprises adopt AI and automation. When hiring at Zapier, which says that 89% of its employees are now ‘AI-fluent’, Phil looks for candidates who are innately curious about AI. “Tools are making anything possible so we’re looking for people who are curious, interested, scrappy builders who want to try new stuff,” he said.

For Phil, this means looking beyond the obvious “have you used ChatGPT?” question. His top three interview questions are instead:

What’s something you rebuilt from scratch after AI changed how you’d approach it? I want to see if they’re rethinking systems, not just adding a chatbot to their workflow.

Tell me about a moment when you realized AI made a workflow or role obsolete. Shows if they’ve zoomed out and connected real dots.

If I gave you a full-time AI engineer tomorrow, what would you have them build? This one is my favorite. It tells me if the candidate is playing offense or just watching the show.

Meg Scheding, a fractional product marketing and GTM leader and former product marketing lead at Brex, uses a similar line of questioning. She asks:

What is the most frustrating use case for AI that you’ve come across? It shows me how much they’re tinkering or playing with the tools. Curiosity (and not fear) about AI is one of the biggest indicators of successful adoption in my opinion.

Yash Tekriwal, the first GTM engineer at Clay, explores AI usage by probing for the nuances that folks learn over time if they’re serious about AI adoption:

What is the structure of a great prompt? Does it change at all based on the model you're selecting or the task to be completed?

What's an example of something that AI is very good at doing, and something that it's not very good at doing? Bonus points for real examples from work experience.

Step 3: Unpack a real-life example of what they’ve built with AI
